Data Types While Reading Csv in Pandas

Pandas read_csv() tricks you should know to speed up your data analysis

Some of the most helpful Pandas tricks to speed upwards your data analysis

![]()

Importing information is the first step in any data science project. Oftentimes, you'll work with information in CSV files and come across problems at the very beginning. In this commodity, you'll run into how to use the Pandas read_csv() part to deal with the following common problems.

- Dealing with different graphic symbol encodings

- Dealing with headers

- Dealing with columns

- Parsing date columns

- Setting information blazon for columns

- Finding and locating invalid value

- Appending data to an existing CSV file

- Loading a huge CSV file with

chunksize

Please check out my Github repo for the source lawmaking.

i. Dealing with different grapheme encodings

Character encodings are specific sets of rules for mapping from raw binary byte strings to characters that brand upward the human-readable text [ane]. Python has congenital-in support for a listing of standard encodings.

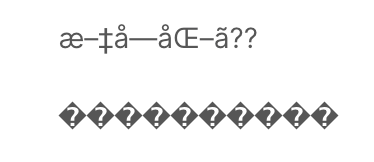

Character due east n coding mismatches are less common today as UTF-8 is the standard text encoding in most of the programming languages including Python. Still, information technology is definitely still a problem if you are trying to read a file with a different encoding than the i it was originally written. You are about probable to end upward with something like beneath or DecodeError when that happens:

The Pandas read_csv() role has an statement call encoding that allows you to specify an encoding to use when reading a file.

Let'due south have a look at an example below:

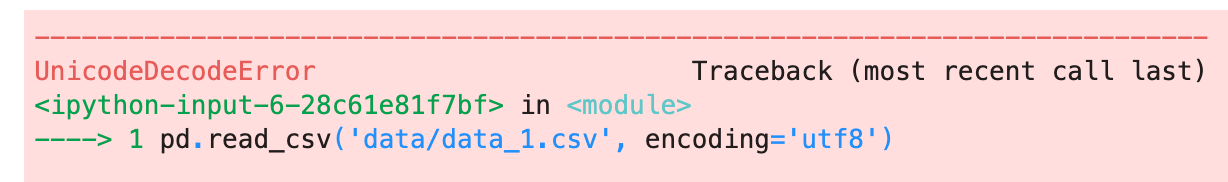

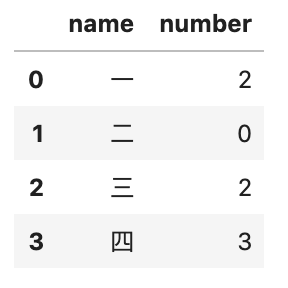

First, nosotros create a DataFrame with some Chinese characters and save information technology with encoding='gb2312' .

df = pd.DataFrame({'name': '一 二 三 四'.dissever(), 'northward': [2, 0, 2, 3]}) df.to_csv('data/data_1.csv', encoding='gb2312', index=False)

Then, you should get an UnicodeDecodeError when trying to read the file with the default utf8 encoding.

# Read information technology with default encoding='utf8'

# Y'all should get an error

pd.read_csv('information/data_1.csv')

In order to read information technology correctly, you should pass the encoding that the file was written.

pd.read_csv('data/data_1.csv', encoding='gb2312')

2. Dealing with headers

Headers refer to the column names. For some datasets, the headers may be completely missing, or y'all might want to consider a dissimilar row equally headers. The read_csv() office has an argument called header that allows you lot to specify the headers to use.

No headers

If your CSV file does not have headers, then you need to set the argument header to None and the Pandas will generate some integer values as headers

For instance to import data_2_no_headers.csv

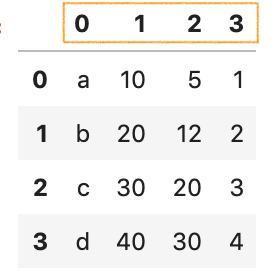

pd.read_csv('data/data_2_no_headers.csv', header=None)

Consider dissimilar row as headers

Allow's take a look at data_2.csv

x1, x2, x3, x4

product, cost, toll, profit

a, 10, 5, 1

b, 20, 12, 2

c, 30, 20, 3

d, twoscore, xxx, 4 It seems like more sensible columns proper name would be product, cost, … turn a profit, just they are non in the commencement row. The argument header also allows you to specify the row number to apply as the column names and the kickoff of data. In this case, we would like to skip the starting time row and use the 2nd row as headers:

pd.read_csv('data/data_2.csv', header=i) 3. Dealing with columns

When your input dataset contains a large number of columns, and you desire to load a subset of those columns into a DataFrame, then usecols will be very useful.

Functioning-wise, it is better considering instead of loading an entire DataFrame into memory so deleting the spare columns, we tin can select the columns we need while loading the dataset.

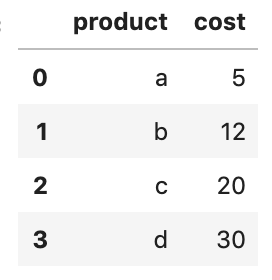

Let's use the same dataset data_2.csv and select the product and cost columns.

pd.read_csv('information/data_2.csv',

header=1,

usecols=['product', 'cost'])

We can also pass the column index to usecols:

pd.read_csv('information/data_2.csv',

header=1,

usecols=[0, 1]) iv. Parsing date columns

Date columns are represented as objects past default when loading data from a CSV file.

df = pd.read_csv('information/data_3.csv')

df.info() RangeIndex: four entries, 0 to 3

Data columns (total 5 columns):

# Column Non-Nil Count Dtype

--- ------ -------------- -----

0 date 4 not-null object

i production four not-null object

2 cost 4 not-goose egg int64

3 price four not-null int64

4 profit 4 non-null int64

dtypes: int64(3), object(ii)

retention usage: 288.0+ bytes

To read the appointment column correctly, we can use the argument parse_dates to specify a list of date columns.

df = pd.read_csv('data/data_3.csv', parse_dates=['date'])

df.info() <class 'pandas.core.frame.DataFrame'>

RangeIndex: 4 entries, 0 to 3

Data columns (full 5 columns):

# Cavalcade Non-Zippo Count Dtype

--- ------ -------------- -----

0 date iv non-zippo datetime64[ns]

1 product 4 non-null object

2 price 4 non-null int64

3 price four non-null int64

iv profit four not-zip int64

dtypes: datetime64[ns](one), int64(3), object(1)

memory usage: 288.0+ bytes

Sometime appointment is split up into multiple columns, for example, year , month , and day . To combine them into a datetime, nosotros can pass a nested listing to parse_dates.

df = pd.read_csv('data/data_4.csv',

parse_dates=[['twelvemonth', 'calendar month', 'twenty-four hours']])

df.info() RangeIndex: 4 entries, 0 to 3

Data columns (total 5 columns):

# Cavalcade Not-Nada Count Dtype

--- ------ -------------- -----

0 year_month_day 4 non-null datetime64[ns]

1 production 4 non-null object

two price 4 non-null int64

iii cost 4 non-null int64

4 profit 4 non-zippo int64

dtypes: datetime64[ns](ane), int64(3), object(1)

memory usage: 288.0+ bytes

To specify a custom cavalcade name instead of the auto-generated year_month_day , we can pass a dictionary instead.

df = pd.read_csv('information/data_4.csv',

parse_dates={ 'date': ['year', 'month', 'day'] })

df.info() If your date column is in a different format, and then you lot tin can customize a appointment parser and laissez passer it to the argument date_parser:

from datetime import datetime

custom_date_parser = lambda x: datetime.strptime(x, "%Y %m %d %H:%One thousand:%S") pd.read_csv('data/data_6.csv',

parse_dates=['date'],

date_parser=custom_date_parser)

For more than about parsing date columns, please check out this commodity

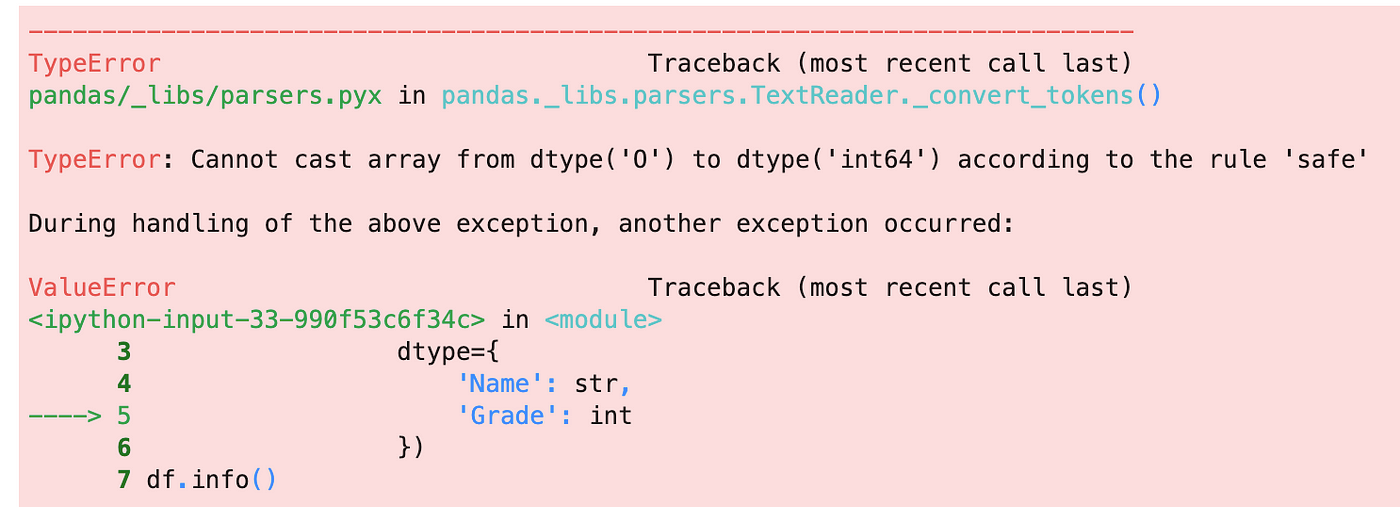

v. Setting data type

If yous want to fix the data blazon for the DataFrame columns, you can use the argument dtype , for case

pd.read_csv('information/data_7.csv',

dtype={

'Proper noun': str,

'Grade': int

}) vi. Finding and locating invalid values

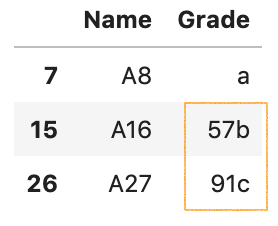

You might get the TypeError when setting information type using the argument dtype

It is always useful to discover and locate the invalid values when this error happens. Here is how you can find them:

df = pd.read_csv('data/data_8.csv') is_error = pd.to_numeric(df['Course'], errors='coerce').isna() df[is_error]

seven. Appending data to an existing CSV file

You tin can specify a Python write mode in the Pandas to_csv() function. For appending data to an existing CSV file, we can apply mode='a':

new_record = pd.DataFrame([['New name', pd.to_datetime('today')]],

columns=['Name', 'Date']) new_record.to_csv('data/existing_data.csv',

manner='a',

header=None,

index=Simulated)

eight. Loading a huge CSV file with chunksize

By default, Pandas read_csv() function volition load the entire dataset into memory, and this could be a memory and functioning issue when importing a huge CSV file.

read_csv() has an argument called chunksize that allows you to retrieve the data in a same-sized chunk. This is specially useful when reading a huge dataset every bit part of your data scientific discipline project.

Let's take a look at an example beneath:

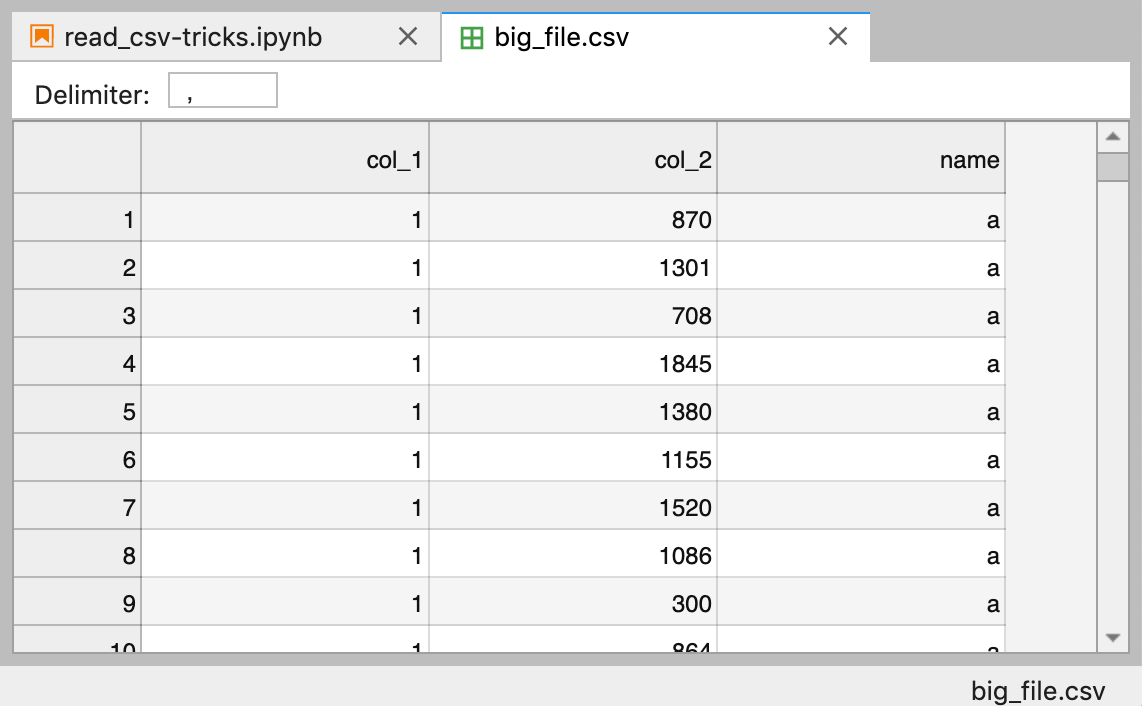

Starting time, let's make a huge dataset with 400,000 rows and save it to big_file.csv

# Brand up a huge dataset nums = 100_000 for name in 'a b c d'.split up():

df = pd.DataFrame({

'col_1': [1]*nums,

'col_2': np.random.randint(100, 2000, size=nums)

}) df['name'] = name

df.to_csv('information/big_file.csv',

manner='a',

alphabetize=Fake,

header= name=='a')

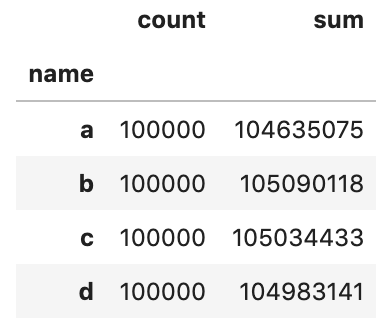

Next, let's specify a chucksize of 50,000 when loading information with read_csv()

dfs = pd.read_csv('data/big_file.csv',

chunksize=50_000,

dtype={

'col_1': int,

'col_2': int,

'proper name': str

}) Allow'southward perform some aggregations on each chunk and and so concatenate the outcome into a single DataFrame.

res_dfs = []

for chunk in dfs:

res = chunk.groupby('name').col_2.agg(['count', 'sum'])

res_dfs.suspend(res) pd.concat(res_dfs).groupby(level=0).sum()

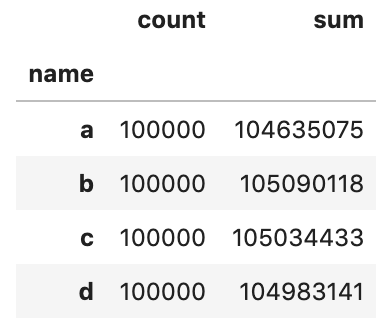

Let's validate the result against a solution without chunksize

pd.read_csv('data/big_file.csv',

dtype={

'col_1': int,

'col_2': int,

'proper name': str

}).groupby('proper noun').col_2.agg(['count', 'sum']) And y'all should get the same output.

That's it

Thanks for reading.

Please checkout the notebook on my Github for the source code.

Stay tuned if you are interested in the practical attribute of automobile learning.

Here are some related articles

- 4 tricks you should know to parse date columns with Pandas read_csv()

- 6 Pandas tricks you should know to speed up your data analysis

- 7 setups you should include at the beginning of a data science projection.

References

- [1] Kaggle Data Cleaning: Character Encoding

Source: https://towardsdatascience.com/all-the-pandas-read-csv-you-should-know-to-speed-up-your-data-analysis-1e16fe1039f3

0 Response to "Data Types While Reading Csv in Pandas"

Post a Comment